To make AI art, you take a few billion images, puree them into a fine mathematical slurry, and then assemble new art from the flecks of expression and authorship floating in the mixture.

This raises interesting copyright questions! Is AI Art like the first amoeba crawling forth from primordial ooze: something entirely new made from existing molecules? Or is it more like T2: a puddle reforming into the same old monster?

This post digs into the Andersen v. Stability copyright lawsuit, and explains relevant aspects of copyright law and fair use, and also offers some commentary on the plaintiffs’ litigation strategy.

Contents:

AI Art Raises Three Big US Copyright Questions

AI art generators sample billions of images to train their models. And these training images are often scraped right from the internet. This raises three big copyright questions:

(1) Is the AI training input copyright infringement? Is it infringement to copy billions of images from the internet to train your AI?

(2) Is the AI art output copyright infringement? Do the images created by the AI infringe the original art used in the training process?

(3) Can the author of AI-generated art claim copyright ownership to that work of art? Does the guy who types in “seattle starry night” own the copyright to the resulting image?

Andersen v. Stability (complaint here) is a case about the first two questions.

There is also a crucial international aspect to this case. The LAION dataset used by Stability was created in Germany by the Machine Vision & Learning research group (CompVis) at LMU Munich. German law seems to explicitly allow copying for the purposes of creating AI training data. (discussed in English here). Did the relevant copying all take place in Germany? Is there a US copyright remedy for plaintiffs here? For now, I’ll assume the copying happened in the US, and use this complaint as an opportunity to discuss US copyright law.

The Andersen v. Stability Copyright Complaint

Sarah Andersen makes online comics. If you’ve ever been online, you’ve seen her art. Sarah, like many artists, is not happy that her art was used as part of a training data set for an AI art generator.

So Sarah filed a copyright infringement lawsuit in federal court on January 13, 2023. The complaint accuses some AI companies (Stability AI, Midjourney, DeviantArt) of copyright infringement for scraping billions of images from the internet (including Sarah’s art) to train AI models.

This is a complicated lawsuit. It’s a class action. It makes several types of legal claims. I’m going to focus on the copyright claims.

Are Art Generators A 21st-Century Collage Tool?

Plaintiffs’ complaint leads with its strongest fact:

Stability downloaded or otherwise acquired copies of billions of copyrighted images without permission to create Stable Diffusion”.

As you might expect, massive copying tends to be bad for a copyright defendant. Plaintiff builds this fact into its main theme:

AI image generators are 21st century collage tools that violate the rights of millions of artists.

In general, it is good to have a litigation theme, and the theme should help simplify complex technical and legal issues. However, there are two problems with this theme.

Collages are often fair use. For example, both Blanch v. Koons (2nd Cir. 2006) and Carious v. Prince (2nd Cir. 2014) found that many types of collage art (made by humans) is fair use. So one argument the defendants might make in reply is “sure we make collages and that is very much allowed.”

The theme also gets the technology wrong, or at least stretches the definition of “collage”. The machine learning model is not really like a collage.

Is Copying An Artist’s Style Copyright Infringement?

After discussing how copying art to train AI is bad, plaintiff argues that the AI output art is, itself, also bad:

(5) These resulting derived images compete in the marketplace with the original images. Until now, when a purchaser seeks a new image “in the style” of a given artist, they must pay to commission or license an original image from that artist. Now, those purchasers can use the artist’s works contained in Stable Diffusion along with the artist’s name to generate new works in the artist’s style without compensating the artist at all.

I’m sympathetic to the human artists here. But as a matter of copyright law, I don’t think you need to buy a license to make art in the style of another artist. Prof Ed Lee suggests that a style of art is copyrightable: “Case law does recognize that artistic style can be copyrighted” That is wrong, or perhaps imprecisely worded. A “style” isn’t copyrightable because it isn’t fixed in a tangible medium of expression.

On the other hand, if you were to copy both the subject and the style of an artwork, that likely copyright infringement (e.g. draw Mickey Mouse in the style of Disney). But applying an artist’s famous style to a totally new subject may be fine. The question, as always, is whether the new art is “substantially similar” to the original art. An artist’s “style” is one aspect of that larger inquiry.

The complaint here suggests that copying just the style of an artist, on its own, is infringement. To me, that seems like a stretch.

Is Every AI Artwork A Derivative Work Of The Training Set?

The owner of a copyright has the exclusive right to create derivative works. If you create a derivative work that is substantially similar to the original, then you are (probably) infringing.

The Andersen complaint argues that anything an AI model spits out is a derivative work:

(90) The resulting image is necessarily a derivative work, because it is generated exclusively from a combination of the conditioning data and the latent images, all of which are copies of copyrighted images. It is, in short, a 21st-century collage tool.

This strikes me as wrong. Is a collage necessarily a derivative work? I don’t know. But I do know that several courts have looked at collages and found them non-infringing . At a minimum, a collage is not necessarily an infringing derivative work.

Some More Difficult Facts For Plaintiffs

This admission in paragraph 93 seems important:

(93) In general, none of the Stable Diffusion output images provided in response to a particular Text Prompt is likely to be a close match for any specific image in the training data. This stands to reason: the use of conditioning data to interpolate multiple latent images means that the resulting hybrid image will not look exactly like any of the Training Images that have been copied into those latent images.

A big part of copyright infringement is that the copy needs to look pretty similar to the original. If I were litigating a copyright case, I’d try pretty hard not to say “none of the pics here are really a close match”. On the other hand, a good lawyer will address a bad fact head on, and try to frame it in the best light possible. Perhaps that’s the strategy here.

[update 1/31/2023]

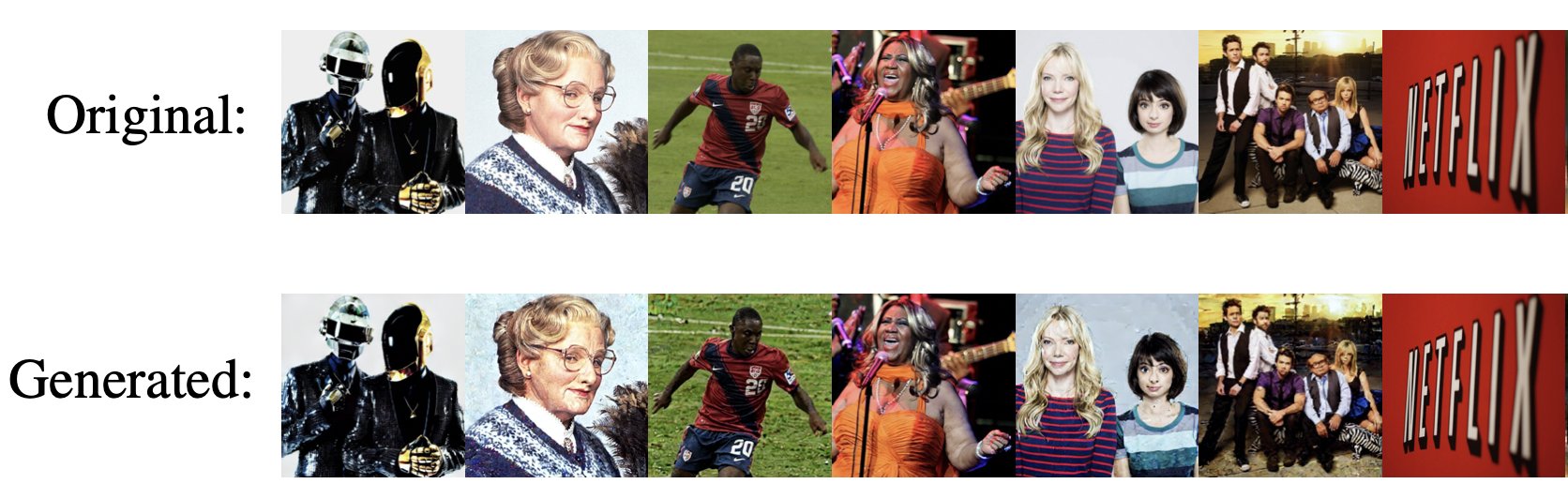

Some researchers suggest that AI diffusion models will output images that are very similar to the original training data if multiple instances of the images are present in the training data.

If I were plaintiffs’ counsel, I’d be pasting these image comparisons all over my complaint.

If I were defendant’s counsel, I’d be telling my clients to carefully de-dupe all their training images, to minimize the chances of output images being exact matches of input images.

Another difficult fact is addressed in paragraph 97:

(97) A latent-diffusion system can only copy from latent images that are tagged with those terms. The system struggles with a Text Prompt like “a dog wearing a baseball cap while eating ice cream” because, though there are many photos of dogs, baseball caps, and ice cream among the Training Images, there are unlikely to be any Training Images that combine all three.

(98) A human artist could illustrate this combination of items with ease. But a latent-diffusion system cannot because it can never exceed the limitations of its Training Images

Part of the complaint here is that AI is stealing human jobs. More specifically, that AI is improperly copying art to make big datasets, and using those datasets to spit out art that previously required paying a human artist to make. Plaintiff is arguing that AI art output is only as good as the input image data in its training set. But at the same time, Plaintiff is contradicting its big theme by admitting that AI is not a good substitute for human art.

The complaint shows this image as an example of how bad AI is at drawing “a dog wearing a baseball cap while eating ice cream”.

Obviously, I fired up Midjourney and punched in “a dog wearing a baseball cap while eating ice cream”. I immediately got this good boy (12/10):

Anyway, the complaint ends this section with a good catchphrase:

(100) Though the rapid success of Stable Diffusion has been partly reliant on a great leap forward in computer science, it has been even more reliant on a great leap forward in appropriating copyrighted images.

The Defense: Transformative Use Is Fair Use

Defendants will likely argue that copying a huge image dataset is “transformative use” and therefore not infringing. For example, google famously copied every book, and then successfully argued this was a “transformative use”. Authors Guild v. Google (2nd Cir. 2015). The court held:

Google’s making of a digital copy to provide a search function is a transformative use, which augments public knowledge by making available information about Plaintiffs’ books without providing the public with a substantial substitute for matter protected by the Plaintiffs’ copyright interests in the original works or derivatives of them.

The Stability defendants might argue roughly the same thing. They are just making digital copies of billions of images to augment the public knowledge, and not create a substitute for the original artworks.

Plaintiffs will argue that AI art generators are absolutely creating substitutes! And Plaintiffs can cite to a handful of recent cases for support: AP v. Meltwater and Fox News v. TVEyes. In both of these cases, the defendants copied plaintiffs news articles (or videos) to create searchable databases. Both courts decided that creating this type of search engine is not a transformative use; it’s a direct substitute for the original news product. That is, people were buying the search engine instead of buying the original news from AP or Fox. The Plaintiffs in our AI case will argue the same thing: these AI art generators are not transformative, they are creating art that is a direct substitute for the original human artist’s work.

The “transformative use” issue is the heart of the debate. Professor Mark Lemley recently argued that copying data for use in machine learning data sets is (or at least should be) fair use. However, Lemley notes that the more the AI output tends to substitute for the original art, the weaker the fair use argument becomes.

Defendants will also lean on a 1992 case called Sega v. Accolade. In that case, Accolade copied some Sega code to they could build video games compatible with the Sega Genesis. Sega sued, and Accolade argued that it only copied the code as an “intermediate step” to understand the unprotectable “ideas and functional elements” of the code. (Copyright does not protect ideas and facts). The court agreed, and ruled this type of copying was fair use. Copying something as an “intermediate step” to access the unprotectable ideas and functional elements is fair use.

Defendants will argue that this is exactly what they are doing: copying billions of images is an intermediate step - running them through a machine learning model to extract the unprotectable ideas. They are only copying a picture of a tree to understand the idea of a tree. Plaintiffs will argue the reverse: that AI models don’t just copy ideas, they copy artistic expression. Plaintiffs have a good argument here, since the output of these models appear to be artistic expression.

On balance, I think the AI defendant’s have a slightly stronger argument. Copying art to create an AI training data corpus seems like fair use. But it’s a close call, and there are still a lot of facts and legal issues to hash out.

Please tweet questions at me, and I’ll try to update this post to clarify any confusing points. @ericladler.

Some links

Links that tend to favor Plaintiffs’ (human artist’s) position:

Plaintiffs’ summary of the case

Artist Sarah Andersen’s tweet on the subject of AI art. Sarah explains why she’s unhappy with AI models copying her art style.

Invasive Diffusion: How one unwilling illustrator found herself turned into an AI model, Andy Baio, Nov 2022.

Links that tend to favor Defendant’s (AI models) position:

Artists file class-action lawsuit against Stability AI. by Andres Guadamuz, January 15, 2023. This is an excellent summary of the complaint, with a focus on the technology issues. Guadamuz thinks the Complaint makes some important mistakes in their description of the machine learning technology.

Fair Learning, by Mark Lemley and Bryan Casey, Texas Law Review, 2021. The law professors argue that fair use should be expanded to allow most types of data collection for training machine learning models.

Artists Attack AI: Why The New Lawsuit Goes Too Far, by Aaron Moss, Jan 23, 2023.

Using AI Artwork to Avoid Copyright Infringement, by Aaron Moss, October 24, 2022.

Some more links

The scary truth about AI copyright is nobody knows what will happen next, James Vincent for TheVerge, Nov 2022.

The Trial of AI: preliminary thoughts on the copyright claims in Sarah Andersen v. Stability AI, Midjourney + DeviantArt by Prof. Ed Lee, 2023.

Do AI generators infringe? Three new lawsuits consider this mega question. , Jeremy Goldman, Jan 2023. This includes an interesting discussion on web scraping and copyright preemption.

Copyright Infringement in AI-Generated Artworks, by Jessica Gillotte, 2020. A student note discusses several relevant copyright topics.